By Gary D. Robson

Closed captioning has been available for more than three decades, but the author takes on the question of whether the current state of captioning still offers people with hearing impairments the access they deserve.

A quick background

Television broadcasts began in the United States in 1928. It would be more than 40 years before closed captioning debuted for deaf audiences, and it was 60 years before we had descriptive video service for people who are blind.

When captioning was finally rolled out, it was done by a small group of people who were highly dedicated to opening the world of television to deaf audiences. They really cared about what they were doing and how it affected people. They did readability and usability studies. Former court reporter and JCR editor Benjamin Rogner did his Ph.D. dissertation on the use of closed captioning to combat adult illiteracy. Several past presidents of NCRA were pioneers in realtime captioning.

When we are doing something because it’s the right thing to do, we tend to put a lot of work into doing it right. When we are doing it because we’re forced to, human nature drives us to do the least we can get away with. All of the laws mandating closed captioning have increased the quantity of captions, but the focus and quality have suffered as a result.

I remember the thrill of standing in a captioning studio at CaptionAmerica (which later became VITAC) in 1988 as NBC’s Today show was being captioned. A team of people had been prepping for well over an hour, and a coordinator and tech were standing by as the stenocaptioner wrote the show. The coordinator threw in global changes as required, moved the captions if they were covering anything important, and fed through any pre-scripted segments.

The result was clean, readable, and fast — and that was typical of what was being produced at captioning companies like the Caption Center at WGBH and the National Captioning Institute. It was also significantly better than what we’re watching on most network broadcasts today, more than 25 years later.

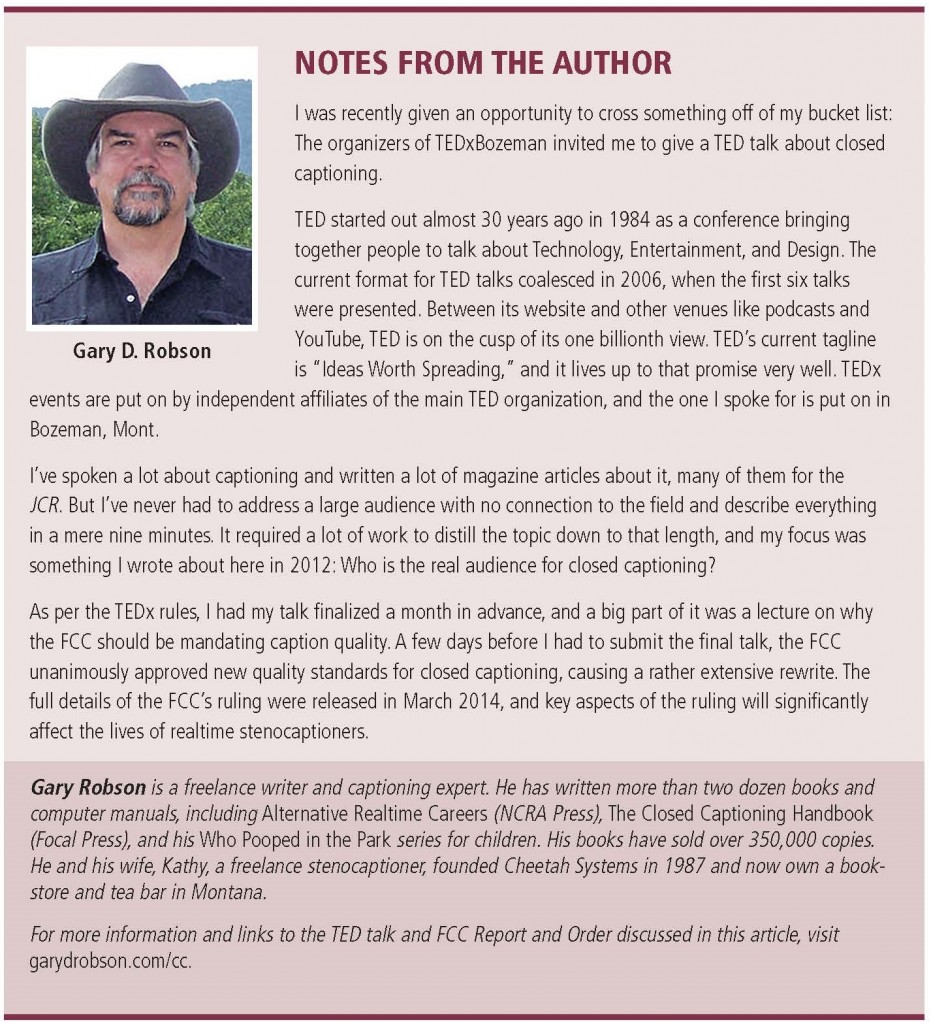

That current quality of captions, and what we can do to fix it, became the focus of my TED talk.

Defining quality

We’ve had quality initiatives for as long as we’ve had captioning. And that entire time, we’ve struggled to agree on how to put a numeric score on caption quality. It’s a thorny problem.

The usability of captions cannot be rated by measuring misstrokes and drops. Dropping the word “really” from “I really don’t like salmon” simply isn’t the same as dropping the word “not” from “I did not do it.” Measuring how much each word affects the meaning of a sentence, though, is an impossibly complex task when judging a stack of CBC or CRR exams. And even if we solved that problem, it wouldn’t be enough.
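To make the point concrete, here is a minimal Python sketch of a plain word error rate (WER), a common count-based metric used here only for illustration; it is not a scoring method the FCC or NCRA prescribes, and the function name is hypothetical. It counts the word edits needed to turn the caption into what was actually said, divided by the number of spoken words, so it scores both example sentences identically even though only one of the drops reverses the meaning.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference (spoken) words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dp[i][j] = minimum edits to turn the first i spoken words into the first j caption words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # every spoken word dropped
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # every caption word inserted
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # drop: spoken word missing from caption
                dp[i][j - 1] + 1,         # insertion: extra word in caption
                dp[i - 1][j - 1] + cost,  # match or misstroke (substitution)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# One dropped word out of five in each case:
print(word_error_rate("I really don't like salmon", "I don't like salmon"))  # 0.2
print(word_error_rate("I did not do it", "I did do it"))                     # 0.2
```

Both captions get the same 20 percent error score, yet the second one says the opposite of what the speaker meant. Any purely count-based score has this blind spot.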

Casual caption users — hearing people like myself, for example — are mostly concerned about the text being there so that we can fill in gaps if we don’t hear something. For viewers who are deaf and hard of hearing, caption quality is synonymous with understandability. Captioning was created to allow people who can’t hear what’s going on to see what’s going on instead. If the captions don’t help a viewer who is deaf to understand the program as a hearing person could, then the captions have failed.

With its March 2014 rulings, the FCC has spelled out four critical components of caption quality. Those standards are implemented differently for realtime captioning than for offline (scripted) captioning, but they still represent a dramatic change from where we are today. To quote the Report and Order directly:

“In evaluating a VPD’s compliance with the captioning quality standards, the Commission will consider the challenges in captioning live programming, such as the lack of an opportunity to review and edit captions before the programming is aired on television. Notwithstanding these challenges, however, measures can be taken to ensure that captioning of live programming is sufficiently accurate, synchronous, complete, and appropriately placed to allow a viewer who depends on captioning to understand the program and have a viewing experience that is comparable to someone listening to the sound track.”

The FCC’s four critical components are accuracy, synchronization, completeness, and placement. Let’s look at each one individually.

Accuracy

“To be accurate, captions must reflect the dialogue and other sounds and music in the audio track to the fullest extent possible based on the type of the programming…”

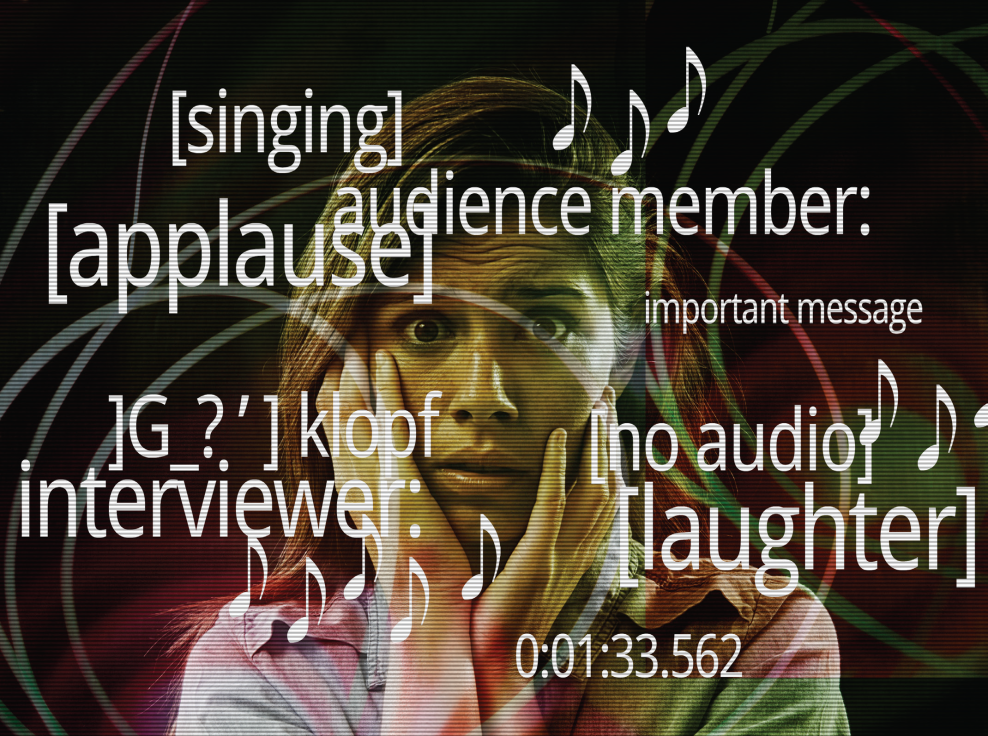

This reflects what a professional realtime captioner does already. If captioning stops, a deaf viewer can’t tell the difference between a technical problem and simply not having any dialogue to caption. This is why every captioner has a stroke to include one-line bracketed captions like [applause], [no audio], or [laughter]. This is why every realtime captioning system since 1989 has allowed for the inclusion of music notes (♪) in the captions. Nothing here represents a significant change for captioners. But the last five words of the paragraph do:

“…and must identify the speakers.”

Proper speaker identification is difficult in the best of circumstances, but it’s nearly impossible without the cooperation of the video producer. Back when I got involved in captioning in the late 1980s, captioners were usually given access to newsroom computer systems to review stories in advance. Security concerns have caused many news organizations to remove that access, and live captioning has spread far beyond news. In addition, most captioners aren’t paid for prep time anymore (just one aspect of the dramatic decline in captioner pay over the past few decades), so they have little incentive to research everyone who might speak during a live broadcast.

Implementing this aspect of the ruling is going to require broadcasters to work with captioners by providing names in advance. Captioners will no longer be able to get away with simply inserting “>>” to indicate a change in speaker. If they don’t have the speaker’s name, they’ll have to use generic labels like “audience member,” “witness,” or “interviewer.”

Synchronization

“In order to be synchronous, captions must coincide with their corresponding dialogue and other sounds to the fullest extent possible based on the type of the programming and must appear at a speed that can be read by viewers.”

This is where we’ve seen some of the worst declines in quality. I’ve recently measured delays of more than 12 seconds between the audio and the captions on major network broadcasts. Try watching TV with the sound running 12 seconds behind the video, and you’ll see why captions that late are so hard for people who are deaf to follow.

Some of this delay is inevitable, but some of it is actually under the control of the captioner or captioning company. What can the captioner do to reduce delays?

1) The captioner should get audio over the phone from master control. Digital TV encoders introduce a delay, and even at the speed of light, bouncing a signal off a satellite in geostationary orbit, roughly 22,000 miles above the equator, takes time. Terrestrial phone signals, by contrast, are fast. If the captioner can get the audio before it goes through encoding and transmission, the captioner hears it several seconds earlier than it would arrive on a TV set.

2) If the captioner’s captioning system has a programmable “hold” that delays sending out the captions, turn it off. This is a nice way to let the captioner make corrections before errors make it to air, but it also introduces dramatic delays in the caption text and makes it harder for people who are deaf to follow.
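For a rough sense of scale on the satellite leg in item 1: a geostationary satellite sits about 35,786 km (roughly 22,236 miles) above the equator, and light travels at about 299,792 km/s. Assuming, for simplicity, an uplink and a downlink that run straight up and down (real slant paths are somewhat longer), one hop works out to:

```latex
t_{\text{hop}} \approx \frac{2h}{c}
  = \frac{2 \times 35{,}786\ \text{km}}{299{,}792\ \text{km/s}}
  \approx 0.24\ \text{s}
```

On this estimate a single satellite hop accounts for only about a quarter of a second, so a delay of several seconds must come mostly from encoding and other processing along the chain. That is why getting the audio ahead of that chain pays off.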

The captioner can also improve readability by increasing the amount of text on the screen. Quite a few broadcasters and captioning companies now use only two short lines of captions, especially for sportscasts. When the captioner writes a quick burst of text for a fast-talking announcer, those two lines can scroll by before anyone could possibly read them.
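A rough model shows why the number of lines matters. This Python sketch is illustrative only: the function name is hypothetical, it assumes rows of up to 32 characters (the CEA-608 caption row width), an average of six characters per word including the trailing space (an assumption), and that every line is filled completely before it rolls up. Once a line is complete, it stays visible only while the remaining rows below it are written.

```python
def seconds_on_screen(words_per_minute: float, rows: int,
                      chars_per_row: int = 32, chars_per_word: float = 6.0) -> float:
    """Approximate time a completed roll-up line remains visible (hypothetical model).

    Assumes full 32-character rows and ~6 characters per word, space included.
    """
    if rows < 1:
        raise ValueError("rows must be at least 1")
    chars_per_second = words_per_minute * chars_per_word / 60.0
    seconds_per_row = chars_per_row / chars_per_second
    # A finished line scrolls off after (rows - 1) more lines have been written.
    return (rows - 1) * seconds_per_row


for rows in (2, 3, 4):
    print(rows, "rows:", round(seconds_on_screen(250, rows), 2), "seconds")
```

Under these assumptions, at 250 words per minute a completed line stays up about 1.3 seconds with two rows and about 2.6 seconds with three, which is the difference between a caption that can be read and one that can't.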

A final thought on the delays: If the captioner’s captions are running 12 seconds behind, then when the station cuts to commercial, the last 12 seconds of captioning will be lost. This leads us into the FCC’s third component of captioning quality.
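To put a rough number on that loss, assume an announcer speaking about 180 words per minute (an assumed rate; actual rates vary widely by program). A 12-second lag at a commercial break then drops roughly:

```latex
\text{words lost} \approx 180\ \frac{\text{words}}{\text{min}} \times \frac{12\ \text{s}}{60\ \text{s/min}} = 36\ \text{words}
```

That is several full sentences at the end of every segment that a deaf viewer never sees.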

Completeness

“For a program’s captions to be complete, they must run from the beginning to the end of the program, to the fullest extent possible, based on the type of the programming.”

This will have very little effect on realtime captioners, as the job already involves writing everything that’s said.

It will, however, have a large effect on newscasts at small-market television stations. It is legal today for such stations to use TelePrompTer-based captions from their newsroom computers, and many of them do just that, leaving all unscripted segments without captions.

It is possible, though, that the work involved in bringing these newscasts into compliance with the new caption quality standards will lead many of these stations to simply hire realtime captioners instead, creating some new jobs.

Placement

“For proper placement, captions may not cover up other important on-screen information, such as character faces, featured text, graphics, or other information essential to the understanding or accessing of a program’s content.”

This is another area that has gotten dramatically worse over the past 25 years. It is common to have captions covering titles, graphics, names, weather maps, and other critical elements on the screen. In most cases, however, it isn’t the captioner’s fault.

Many captioners today work from an audio feed, without being able to see the broadcast they’re captioning. They have absolutely no idea what their captions might be covering. In addition, some broadcasters are concerned that captions “jumping around the screen” could be distracting to viewers, so they’ve forbidden the captioners from moving the captions.

As if that isn’t bad enough, captions appear in different places depending on the viewer’s TV set. TV sets come in a variety of aspect ratios, as do broadcasts. TVs offer the option to stretch a picture to fit, expand it so that the edges of the picture aren’t visible, or show black bars at the sides (or top and bottom, depending on the situation). Each option puts the captions over a different part of the picture.

The issues raised by mandated quality aren’t easy ones to solve. They will require a closer working relationship between broadcasters and captioners and a deeper understanding of the challenges involved. The new ruling will also help to refocus us on the original purpose of closed captioning.

Good captions benefit everyone. They can help children learn to read, help us follow TV programs in noisy bars and gyms, and aid in battling adult illiteracy. But they are much more than that to people who are deaf. Captions can save lives in an emergency broadcast, telling viewers who are deaf when they need to evacuate their homes or what roads to avoid. The technology that’s used for captioning is also used for CART in educational and business settings.

Captioning has been around for more than 30 years now. This is our opportunity to retune, refocus, and remember who closed captions were created for in the first place.